Terminal History Log Analysis

Specification document: Terminal History Log

Background & Strategic Fit

The terminal has always been a close friend to developers. We may think of it only as a tool for daily work, but it turns out we can personalize the experience to each user's behavior based on a dump of their terminal history log.

Problem Statement

As our applications keep growing with market demand, we aim to give our customers the best solutions and support in the shortest possible time. Many of our issues are resolved through machine-to-machine connections, which means the work happens on Linux, Windows, or macOS.

Manual log analysis depends on the proficiency of the person running it. If they have a deep understanding of the system, they may make good progress reviewing logs manually. However, this approach has serious limitations: it puts the team at the mercy of one person. Whenever that person is unreachable or unable to resolve the issue, the entire operation is at risk.

Specification

History log data from the operating system's terminal.

Goals

- Personalize the terminal history to reflect user behavior.
- Comply with security policies, regulations, and audits.
- Automate tasks.

Success Metrics

- Transform unstructured terminal history into structured data.
- Analyze the log data.

Requirements

- A shell terminal
- Hardware running an operating system
- Dump the history into a .txt file
- Append a timestamp to each history entry
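A minimal sketch of the dump-and-timestamp requirements, assuming Bash: when `HISTTIMEFORMAT` is set, Bash writes a `#<epoch seconds>` comment line before each command in the history file, so a dump such as `export HISTTIMEFORMAT='%F %T '` followed by `history -w history.txt` carries timestamps. The `HistoryEntry` type and `parse_history` function are hypothetical names used only for illustration; other shells (for example zsh) use a different on-disk format that this parser does not handle.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Iterable, List, Optional


@dataclass
class HistoryEntry:
    """One structured record from the terminal history dump (hypothetical type)."""
    timestamp: Optional[datetime]  # None when no timestamp line preceded the command
    command: str


def parse_history(lines: Iterable[str]) -> List[HistoryEntry]:
    """Turn raw Bash history lines into structured entries.

    Assumes the format Bash writes when HISTTIMEFORMAT is set:
    a '#<epoch seconds>' line precedes each command.
    """
    entries: List[HistoryEntry] = []
    pending_ts: Optional[datetime] = None
    for raw in lines:
        line = raw.rstrip("\n")
        if line.startswith("#") and line[1:].isdigit():
            # Timestamp comment that belongs to the next command.
            pending_ts = datetime.fromtimestamp(int(line[1:]), tz=timezone.utc)
            continue
        if not line.strip():
            continue  # skip blank lines
        entries.append(HistoryEntry(timestamp=pending_ts, command=line))
        pending_ts = None
    return entries


if __name__ == "__main__":
    sample = ["#1700000000\n", "git status\n", "#1700000060\n", "ssh deploy@10.0.0.5\n"]
    for entry in parse_history(sample):
        print(entry.timestamp.isoformat(), entry.command)
```

Entries recorded before `HISTTIMEFORMAT` was enabled have no timestamp line, so they come back with `timestamp=None` instead of being dropped; the cleaning step can decide what to do with them.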

Analysis

Types of structured data analysis

- Pattern Detection and Recognition: filtering incoming messages based on a pattern book. Detecting patterns is an integral part of log analysis because it helps spot anomalies.

- Log Normalization: converting log elements, such as IP addresses or timestamps, to a common format.

- Classification and Tagging: tagging messages with keywords and categorizing them into classes. This enables you to filter the data and customize the way you visualize it.
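The three analysis types can be sketched on individual history commands and log fields. This is an illustration under stated assumptions, not an existing rule set: `PATTERN_BOOK`, `CATEGORY_BY_PROGRAM`, and all function names are hypothetical, and a real pattern book would be maintained by the team that owns the analysis.

```python
import ipaddress
import re
from datetime import datetime, timezone
from typing import Dict, List


# Log normalization: bring IPs and timestamps to one common format.
def normalize_ip(value: str) -> str:
    """Normalize an IPv4/IPv6 address to its canonical compressed form."""
    return ipaddress.ip_address(value.strip()).compressed


def normalize_timestamp(value: str) -> str:
    """Normalize an epoch-seconds or access-log timestamp to ISO 8601 UTC."""
    if value.isdigit():
        dt = datetime.fromtimestamp(int(value), tz=timezone.utc)
    else:
        # Access-log style, e.g. "14/Nov/2023:22:13:20 +0000".
        dt = datetime.strptime(value, "%d/%b/%Y:%H:%M:%S %z").astimezone(timezone.utc)
    return dt.isoformat()


# Pattern detection: hypothetical pattern book of label -> regex.
PATTERN_BOOK: Dict[str, re.Pattern] = {
    "destructive_delete": re.compile(r"\brm\s+-rf\b"),
    "privilege_escalation": re.compile(r"^\s*sudo\b"),
    "remote_login": re.compile(r"^\s*ssh\b"),
}


def detect_patterns(command: str) -> List[str]:
    """Return the label of every pattern-book entry that matches the command."""
    return [label for label, regex in PATTERN_BOOK.items() if regex.search(command)]


# Classification and tagging: hypothetical program -> category mapping.
CATEGORY_BY_PROGRAM: Dict[str, str] = {
    "git": "version_control",
    "docker": "containers",
    "kubectl": "containers",
    "ssh": "remote_access",
    "scp": "remote_access",
    "ls": "filesystem",
    "cd": "filesystem",
    "rm": "filesystem",
}


def classify(command: str) -> str:
    """Tag a command by the program it runs, ignoring a leading sudo."""
    tokens = command.split()
    if tokens and tokens[0] == "sudo":
        tokens = tokens[1:]
    if not tokens:
        return "unknown"
    return CATEGORY_BY_PROGRAM.get(tokens[0], "other")
```

For example, `detect_patterns("sudo rm -rf /tmp/build")` flags both `destructive_delete` and `privilege_escalation`, while `classify` on the same command tags it as `filesystem` because the leading `sudo` is skipped.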

Security Consideration

Do you handle especially sensitive data over HTTP, such as credit card numbers, that may require HTTPS? The logging system does not intend to log sensitive data such as passwords, credentials, or credit card numbers.
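Because terminal commands can contain secrets typed inline, one way to honor this is to redact each history entry before it is stored. The sketch below is a regex-based approach with hypothetical rules (secret-looking flags, `NAME=value` assignments whose name suggests a secret, and Luhn-valid 13 to 19 digit sequences); the rule set and the `redact` name are assumptions to adapt to the actual security policy, and regexes will never catch every secret.

```python
import re

# Hypothetical redaction rules; adapt them to your own security policy.
SECRET_FLAG_RE = re.compile(
    r"(--?(?:password|passwd|token|secret|api-key)[=\s]+)\S+", re.IGNORECASE
)
SECRET_ASSIGNMENT_RE = re.compile(r"\b([A-Z0-9_]*(?:PASSWORD|SECRET|TOKEN|KEY)[A-Z0-9_]*=)\S+")
# 13 to 19 digits, optionally separated by single spaces or dashes.
CARD_CANDIDATE_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum; reduces false positives such as long numeric IDs."""
    total = 0
    for index, char in enumerate(reversed(digits)):
        digit = int(char)
        if index % 2 == 1:  # double every second digit from the right
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def _mask_card(match: re.Match) -> str:
    digits = re.sub(r"[ -]", "", match.group(0))
    return "[REDACTED-CARD]" if luhn_valid(digits) else match.group(0)


def redact(command: str) -> str:
    """Mask secrets in a history entry before it is written to the log store."""
    command = SECRET_FLAG_RE.sub(r"\1[REDACTED]", command)
    command = SECRET_ASSIGNMENT_RE.sub(r"\1[REDACTED]", command)
    return CARD_CANDIDATE_RE.sub(_mask_card, command)
```

Keeping the flag or variable name (for example `--password=[REDACTED]`) preserves enough context for pattern detection and classification while dropping the secret itself.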

Do you require authentication & authorization on some features, and is everything blocked by default unless authorized?

No

Financial Consideration

Since this will increase the number of characters we store in the log, we should check the impact on logging cost. The cost is related to the number of characters we write.
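As a rough worked estimate, assuming the Bash timestamp format (a `#<epoch seconds>` line before each command), each history entry grows by 12 characters: one `#`, ten epoch digits, and a newline. The entry volume and the per-character price below are hypothetical inputs, not measured figures; substitute the real numbers from the logging provider.

```python
# Assumption: each entry gains one Bash timestamp line like "#1700000000\n".
TIMESTAMP_LINE_CHARS = len("#1700000000\n")  # '#' + 10 digits + newline = 12


def extra_characters(num_entries: int) -> int:
    """Characters added to the log by timestamping num_entries commands."""
    return num_entries * TIMESTAMP_LINE_CHARS


def extra_cost(num_entries: int, price_per_million_chars: float) -> float:
    """Added cost; price_per_million_chars is a hypothetical rate."""
    return extra_characters(num_entries) / 1_000_000 * price_per_million_chars
```

For instance, a hypothetical 450,000 entries per month adds 5,400,000 characters, which at a hypothetical rate of 0.50 per million characters comes to about 2.70 per month.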

Data Tracking, Data Analytics & Marketing Integration Consideration

Does this feature require additional monitoring & tracking? What about mobile or web tracking? It's important that tracking requirements be identified from Day 1.

Log data should not be tracked; however, we may need some kind of analytics dashboard integrated with our logging system.

Operation Consideration

Does this feature introduce new behavior that needs to be communicated to customers, or new features or changes used by the operations team?

This improvement should be announced to the engineering teams who work with logs on a daily basis.

Design

System Design

Extract challenges

The data extraction part of the ETL process poses several challenges. A lot of the problems arise from the architectural design of the extraction system:

Data volume

The volume of extracted data affects the system design. Solutions built for low-volume data do not scale well as the data grows. This study uses more than 2,000 rows of data, about 3.3 GB in size.
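At this size, loading the whole file into memory is the first thing that stops scaling. A minimal sketch of a streaming extract, assuming a line-oriented text log: iterating over the file object reads it incrementally, and aggregates are computed in a single pass. The function names and the example path are hypothetical.

```python
from collections import Counter
from typing import Iterable, Iterator


def iter_log_lines(path: str) -> Iterator[str]:
    """Yield the file one line at a time so memory use stays flat.

    read() or readlines() would pull the whole file into memory; iterating
    over the file object keeps only a small buffer and the current line.
    """
    # errors="replace" keeps the pass going if a line has invalid UTF-8 bytes.
    with open(path, encoding="utf-8", errors="replace") as handle:
        for line in handle:
            yield line.rstrip("\n")


def count_by_first_field(lines: Iterable[str]) -> Counter:
    """Count lines by their first whitespace-separated field in one pass."""
    counts: Counter = Counter()
    for line in lines:
        fields = line.split(maxsplit=1)
        if fields:  # skip blank lines
            counts[fields[0]] += 1
    return counts
```

Usage would look like `count_by_first_field(iter_log_lines("access.log"))`; for a web access log whose first field is the client IP, this yields requests per client without ever holding the full file in memory.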

Source limits

We used private sources from system apps. The dataset was downloaded from Harvard's Dataverse and contains logs from an Iranian e-commerce site (zanbil.ir).
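Web server logs like these are usually extracted line by line with a fixed pattern. The sketch below assumes the zanbil.ir logs follow the NCSA Combined Log Format (the default `combined` format of Nginx and Apache); that assumption should be checked against the downloaded files. The sample line in the usage note is made up for illustration and is not taken from the dataset.

```python
import re
from typing import Dict, Optional, Union

# Assumption: NCSA Combined Log Format.
COMBINED_RE = re.compile(
    r'(?P<ip>\S+) \S+ (?P<user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_access_line(line: str) -> Optional[Dict[str, Union[str, int]]]:
    """Parse one access-log line into named fields, or None if malformed."""
    match = COMBINED_RE.match(line)
    if match is None:
        return None  # malformed line; count or quarantine it separately
    record: Dict[str, Union[str, int]] = dict(match.groupdict())
    record["status"] = int(match.group("status"))
    # A '-' size means no body was sent.
    size = match.group("size")
    record["size"] = 0 if size == "-" else int(size)
    return record
```

For example, the illustrative line `10.0.0.5 - - [22/Jan/2019:03:56:14 +0330] "GET /index.html HTTP/1.1" 200 1234 "-" "Mozilla/5.0"` parses into `ip`, `time`, `method`, `path`, `status`, `size`, `referrer`, and `agent` fields, ready for the normalization and cleaning steps.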

Transform

Data cleaning

Data cleaning involves identifying suspicious data and correcting or removing it. For example:

- Remove missing data
- Trim data
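The two cleaning steps listed above can be sketched over dictionary records. The required field names (`timestamp`, `command`) and the `clean_records` function are hypothetical and should be aligned with the real schema; "missing" here means a required field that is absent, `None`, or empty after trimming.

```python
from typing import Dict, Iterable, List, Optional

Record = Dict[str, Optional[str]]

# Hypothetical required fields; align them with the real schema.
REQUIRED_FIELDS = ("timestamp", "command")


def clean_records(records: Iterable[Record]) -> List[Record]:
    """Trim string values and drop records with missing required fields."""
    cleaned: List[Record] = []
    for record in records:
        # Trim data: strip leading/trailing whitespace from every string value.
        trimmed = {
            key: value.strip() if isinstance(value, str) else value
            for key, value in record.items()
        }
        # Remove missing data: skip the record if a required field is absent,
        # None, or empty after trimming.
        if any(not trimmed.get(field) for field in REQUIRED_FIELDS):
            continue
        cleaned.append(trimmed)
    return cleaned
```

Trimming runs before the missing-data check on purpose, so a command made only of whitespace is treated as missing rather than kept as an empty-looking row.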

Load

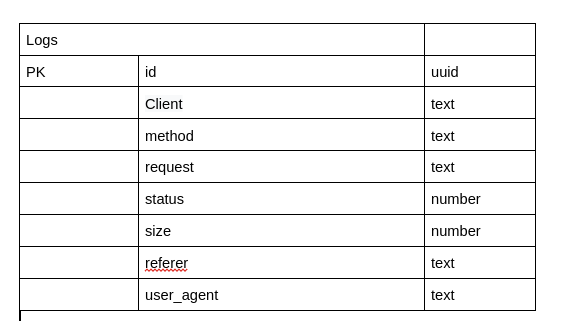

Database schema
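Since the schema itself is only available as the image-1.png figure, the load step below uses a hypothetical table to show the shape of the operation: create the schema if needed, then bulk-insert cleaned, redacted rows in one transaction. SQLite (Python's built-in `sqlite3`) is used only because it needs no setup; the table name, columns, and `load_entries` function are assumptions, not the project's actual schema.

```python
import sqlite3
from typing import Iterable, Tuple

# Hypothetical schema for illustration; the real one is defined in the figure.
SCHEMA = """
CREATE TABLE IF NOT EXISTS history_entry (
    id          INTEGER PRIMARY KEY AUTOINCREMENT,
    username    TEXT NOT NULL,
    host        TEXT NOT NULL,
    executed_at TEXT NOT NULL,  -- ISO 8601 UTC timestamp
    command     TEXT NOT NULL,  -- already redacted
    category    TEXT            -- tag from classification
);
CREATE INDEX IF NOT EXISTS idx_history_user_time
    ON history_entry (username, executed_at);
"""

Row = Tuple[str, str, str, str, str]


def load_entries(conn: sqlite3.Connection, rows: Iterable[Row]) -> int:
    """Create the schema if needed and insert rows; returns the inserted count."""
    conn.executescript(SCHEMA)
    with conn:  # commits on success, rolls back on error
        cursor = conn.executemany(
            "INSERT INTO history_entry (username, host, executed_at, command, category) "
            "VALUES (?, ?, ?, ?, ?)",
            rows,
        )
    return cursor.rowcount
```

The composite index on `(username, executed_at)` is a guess at the most common query, a per-user timeline for personalization; adjust it to the queries the dashboard actually runs.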